Hello,

The Medical Device Regulation and the In Vitro Diagnostic Medical Devices Regulation were published on 5 May 2017!

See the Official Journal of the EU.

MDR and IVDR published

Cybersecurity in medical devices - Part 3 AAMI TIR57:2016

After a long pause, we continue this series about cybersecurity in medical devices with a discussion on AAMI TIR57:2016 Principles for medical device security — Risk management.

This TIR is the only document in the corpus of medical device standards and guidances that deals with the application of cybersecurity principles and their impact on the ISO 14971 risk management process. FDA guidances do exist, but they are not as direct as this TIR in showing the impact of the cybersecurity management process on the risk management process.

Structure of AAMI TIR57:2016

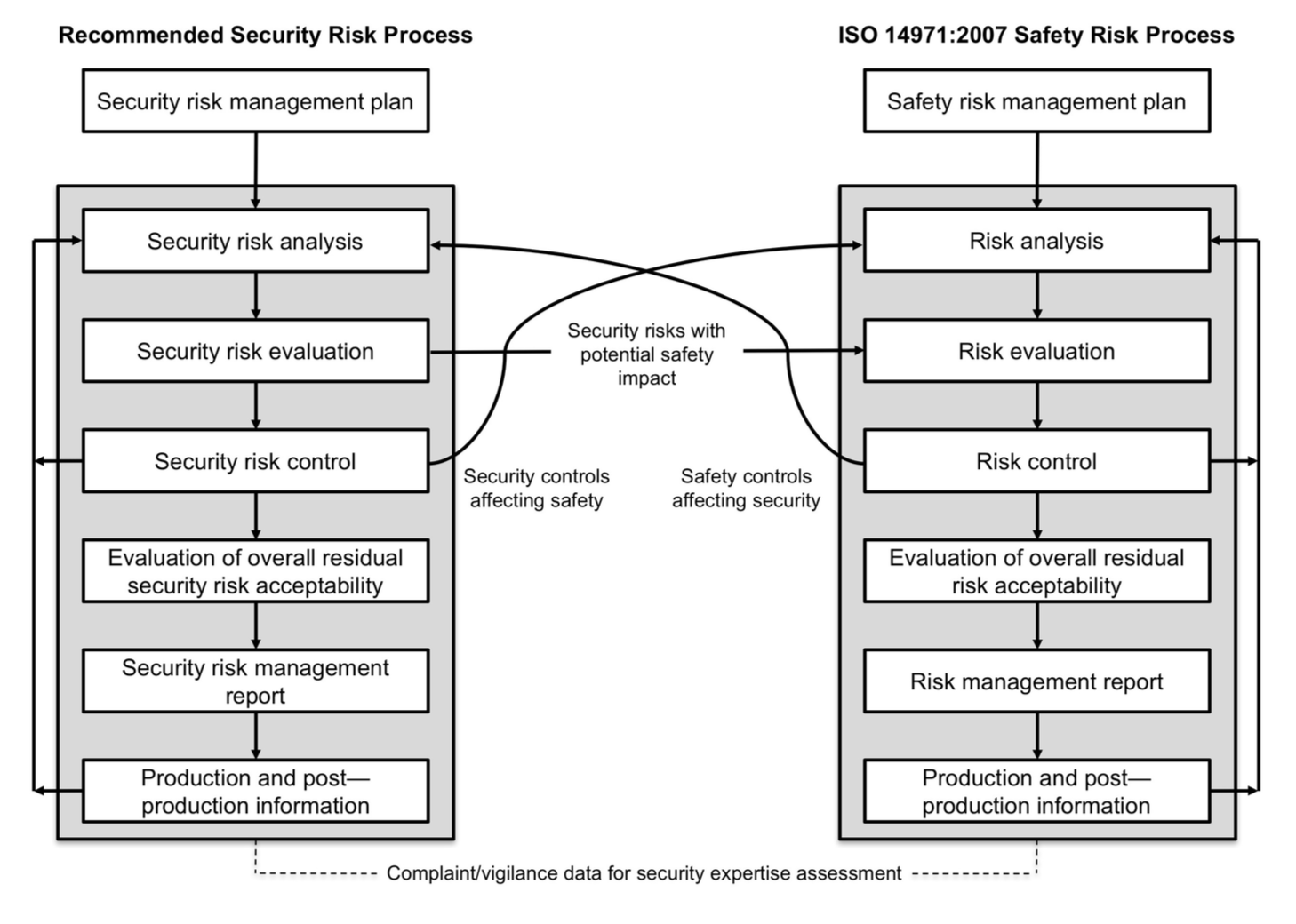

The structure of sections 3 to 9 of this document is copied from ISO 14971 standard. It shows how the security risk management process can be modeled in a manner similar to the safety risk management process.

If you’re at ease with ISO 14971, you’ll be at ease with AAMI TIR57. Your effort of understanding will be focused on security risk management concepts, not the process (risk identification, evaluation, mitigation and so on…) that you already know.

We can thank the working group in charge of this TIR for this presentation effort. The diagram below, extracted from the TIR, shows the analogy between both processes.

You've probably already seen this diagram elsewhere. And I bet that many articles or newsletters dealing with cybersecurity in medical devices are going to reproduce it as well!

Separation of processes

The TIR recommends separating the security and safety risk management processes. This looks like a good recommendation, as the people involved in the two processes are not all the same. Data security and clinical safety concepts are not managed by the same persons with the same qualifications.

Cons

This separation is going to remain very theoretical for most small businesses. In practice, big companies can afford to maintain two processes and the qualified persons they need, but small businesses can't.

Small businesses will require the help of security experts to manage security risks, but these experts will most probably be hired as consultants. People who currently manage the ISO 14971 process will manage both processes, without clear separation on a day-to-day basis.

Pros

The separation of processes is a good way to monitor security risk management efficiently. Keeping a security risk management file separate from the safety risk management file makes it possible to document the outputs of the process without mixing concepts of security risk management (threats, vulnerabilities, assets, adverse impacts) with concepts of safety risk management (hazardous phenomenon, hazardous situation, hazard, harm).
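As a rough illustration of keeping the two files apart, the sketch below models them as two separate record types, linked only by an optional cross-reference. The class names, field names and identifiers are hypothetical; they are not taken from the TIR or from ISO 14971.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SafetyRisk:
    """One entry of the safety risk management file (safety vocabulary)."""
    risk_id: str
    hazard: str
    hazardous_situation: str
    harm: str


@dataclass
class SecurityRisk:
    """One entry of the security risk management file (security vocabulary)."""
    risk_id: str
    asset: str
    threat: str
    vulnerability: str
    adverse_impact: str
    # Hypothetical link, set only when the security risk may lead to patient harm.
    related_safety_risk: Optional[str] = None


def security_risks_with_safety_impact(risks: List[SecurityRisk]) -> List[str]:
    """Return the ids of security risks that also need a review in the safety file."""
    return [r.risk_id for r in risks if r.related_safety_risk is not None]


security_file = [
    SecurityRisk("SEC-1", "therapy settings", "remote attacker",
                 "unauthenticated API", "settings modified",
                 related_safety_risk="SAF-3"),
    SecurityRisk("SEC-2", "patient records", "insider",
                 "shared password", "data disclosure"),
]
flagged = security_risks_with_safety_impact(security_file)
print(flagged)
```

The two files stay independent, and the cross-reference is the only place where security and safety concepts meet.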

Annexes of AAMI TIR57:2016

The annexes found after section 9 are another wealth of information. They contain practical methods to implement a security risk management process. There is even a list of questions that can be used to identify medical device security characteristics, like the annex C of ISO 14971 for safety risks!

The TIR ends with an example based on a fictional medical device: the kidneato system, with everything that a manufacturer shouldn’t do: an implanted part, a part connected to the public network, and a server storing medical data. Delicatessen for hackers!

Multiplication of risk management files

With the ongoing implementation of ISO 13485:2016 by medical device manufacturers, another consequence of security risk management is the multiplication of risk management files:

- QMS Process risk management,

- Safety risk management,

- Security risk management, and

- Risk / opportunities management, for the fans of ISO 9001:2015.

We can even try to draw a table of interactions between these processes (I skip ISO 9001:2015, but don’t conclude I’m not a fan :-))

| Risks (rows) / Affects (columns) | Safety | QMS Process | Security |
| --- | --- | --- | --- |
| Safety | X | Safety risk mitigations implemented in QMS processes | Safety risks with potential impact on security. Safety controls affecting security |
| QMS Process | Hazardous situations in QMS processes with impact on safety. QMS process controls affecting safety | X | QMS process risk controls affecting security. Threats, vulnerabilities, assets found in processes to assess in security risk management |
| Security | Security risks with impact on safety. Security controls affecting safety | Security risks with impacts on QMS processes (and possibly safety). Security controls affecting QMS processes | X |

Conclusion

AAMI TIR57:2016 is an excellent source of information for newbies in security risk management. It is a must-read for people not qualified in security risk management who need to manage the process and anticipate its impact on other processes.

In the next article, we’ll see the consequence of cybersecurity management on software development and maintenance processes.

They've raised the bar!

If you are a regular visitor of this blog, you noticed that almost three months elapsed between the last two articles on cybersecurity.

That's not what I planned.

The time dedicated to this blog was totally swallowed by the other facets of my job. Namely filling the gap between the current level of compliance of manufacturers, and the new expectations of notified bodies and regulatory authorities in the European Union. The bar has been raised!

It gives you a sense of what we're getting into with the new MDR.

Cybersecurity in medical devices - Part 4 Impact on Software Development Process

We continue this series of posts on cybersecurity with some comments on impacts of cybersecurity on the software development documentation.

IEC 62304 class A software vs cybersecurity

IEC 62304 defines a rather light set of constraints for class A software. Many connected objects or back-office servers processing medical data are low-risk devices (when they qualify as medical devices). If we set aside devices used for closed-loop control or remote monitoring (or the like), most standalone software in the back office (services) or used remotely (standalone web apps or mobile apps) will present failures with negligible consequences for the patient. As such, most standalone software will be in class A (a conclusion that I verified empirically).

Cybersecurity documentation requirements

For class A software, it’s usual to do the bare minimum to be compliant: software requirements, functional tests, a bit of risk assessment with acceptable risk prior to mitigation actions, a bit of SOUP management, and voilà! It won’t be enough to prove that standalone medical device software is secure.

Thus, cybersecurity requires additional documentation: security risk assessment, mitigation actions, and evidence of their effective implementation. Where are we going to find this evidence?

In software requirements, of course, but also in architectural design, possibly in detailed design, and in unit/integration/verification tests. This sounds more like class B than class A.

Special tests for cybersecurity

Moreover, we can mimic section 5.5.4 of IEC 62304, applicable to class C only, by including additional cybersecurity test requirements, like application of OWASP 13 Top-10 to verify good coding practices. For this kind of test, tools like static analyzers or methods like peer code reviews are usually used. These tools and methods are more frequent in class C software development than in class B and A.

Summary

To sum up, we have the following cases:

| Type | SW embedded in MD (contributes to essential performance) | Standalone SW (diagnosis / treatment intended use) | SW embedded in MD (doesn't contribute to essential performance) | Standalone SW (no diagnosis / treatment intended use) |
| --- | --- | --- | --- | --- |
| Usual SW safety class | C or B | C or B | A | A |
| Connected? | Usually no, but BTLE is appealing! | Usually yes, on PC or mobile connected to HCP or public network | Yes with BTLE or Wifi connected to HCP or public network | Usually yes, on PC or mobile connected to HCP or public network |
| Cybersecurity overhead | Null if not connected (beware of USB). Very high if connected, to prove that security breach won’t result in the degradation of essential performance. | Limited. Security breach will mostly result in data loss, rarely in non-serious harm (i.e. significant delay in diagnosis) | Null if not connected. Important if connected (data loss only, but class A documentation is not detailed enough) | Important if connected (data loss only, but class A documentation is not detailed enough) |

This comparison of different cases shows that we have a paradox:

- High safety risk devices will require a limited cybersecurity overhead. If essential performance relies on software, device connectivity will be limited. The cybersecurity documentation will represent a limited amount of work, compared to device safety documentation.

- Low safety risk devices will require a significant cybersecurity overhead. Software safety documentation is poor, thus the cybersecurity overhead will represent a significant amount of work, compared to device safety documentation.

This comparison is not valid for high-risk devices designed for connectivity, like a hypothetical closed-loop system whose loop goes through a remote server. It's the worst case, reserved for manufacturers with enough resources to support the design and maintenance of such a device.

Conclusion

Connecting a medical device to a network is not trivial, especially if that network is not a controlled network, like a public or home network. The cybersecurity requirements established by the FDA guidances in the US and by the new Medical Device Regulation in the EU have significant consequences on the cost of the documentation needed to provide evidence of compliance.

The cost is significant for IEC 62304 class A software, which requires documentation closer to class B than class A.

Wait, but what of harmonized standards?

While the FDA continues to update the list of recognized standards periodically and reliably (last update in August 2017), the European Commission hasn't updated the list of harmonized standards since May 2016.

FDA recognized standards

The update of FDA recognized standards in August 2017 brought to the looong list of standards:

- ANSI UL 2900-1 on cybersecurity (will be analyzed in a further post),

- IEC 82304-1 on health software,

to name just a few, focusing only on software as a medical device.

We can also check and verify on the FDA database of recognized standards that IEC 62304 amendment 1 2015 was recognized in April 2016, and IEC 62366-1 2015 was recognized in June 2016.

EU harmonized standards

On the other side of the Atlantic Ocean, things are less ... clear.

While standards published by the ISO, IEC or other international organizations continue to evolve, the list of harmonized standards still references "old" standards:

- For software: IEC 62304:2006, no 62304 2015 or 82304 in sight,

- For usability: IEC 62366:2008, no 2015 in sight,

- For general standards ISO 13485:2012 (doh!),

- Fortunately ISO 14971 hasn't evolved yet (phew!),

- For embedded software, old versions of the IEC 60601-1-x collateral standards and IEC 60601-2-x particular standards still referencing IEC 60601-1 2nd edition,

to name just a few.

I heard that more than a hundred standards are waiting to be harmonized (this sounds likely, but I don't have the source and hope I'm not spreading fake news).

The current list is getting older and older. The toughest element is that all manufacturers are switching or have already switched to ISO 13485:2016.

Hey, European Commission, this list passed its expiry date! What do we do now?

Recommendations of Notified Bodies

Fortunately (or strangely, or ironically, or ... choose your positive or negative adverb), Notified Bodies have the solution:

Disregard the current list of harmonized standards and consider the last versions of standards as state-of-the-art.

This recommendation was given by two different notified bodies to manufacturers I work with.

So, still focusing on standalone software, you can apply the following standards:

- IEC 62304 Amd1:2015,

- IEC 62366-1:2015,

- and even IEC 82304-1:2016.

For embedded software, I can't imagine the confusion caused by the application of the latest versions not present in the list of harmonized standards. It's a very good idea to consult your notified body before applying the latest versions of the IEC 60601-x-y family.

Now, what?

Now, IEC 62304:2006 is getting old,

Now, ISO 13485:2003 is withdrawn,

Now, the new regulations 2017/745 and 2017/746 are there,

Now, the European Commission should do their homework.

100% probability of software failure in IEC 62304 Amd1 2015

A reader of the post on IEC 62304 Amd1 2015 noticed in the comments that the sentence in section 4.3.a was removed:

If the HAZARD could arise from a failure of the SOFTWARE SYSTEM to behave as specified, the probability of such failure shall be assumed to be 100 percent.

Don't be too quick to scratch the 100 percent thing!

The dreadful 100 percent is still present in the informative Annex B.4.3.

Even if it is no longer in the normative part, you shall continue to bear this assumption in mind when assessing software risks. The underlying concept is that it's not possible to assess the probability of software failure, thus the worst case shall be considered.

This is the state-of-the-art, present in ISO 14971, in IEC 80002-1, in IEC 62304, and in the FDA Guidance for the Content of Premarket Submissions for Software Contained in Medical Devices.

100% probability is not dead!
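To make the assumption concrete, here is a minimal sketch that splits the probability of harm into the probability of the failure and the probability that the failure leads to harm. This split is a simplification, and the function name, parameter names and numeric values are made up for illustration.

```python
def probability_of_harm(p_harm_given_failure: float,
                        p_failure: float = 1.0) -> float:
    """Probability of harm = P(failure) x P(harm | failure).

    For a software failure, p_failure keeps its default of 1.0 (worst case),
    so the estimate is driven only by what happens after the failure.
    """
    for p in (p_failure, p_harm_given_failure):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must be between 0 and 1")
    return p_failure * p_harm_given_failure


# Software failure: the probability of failure is assumed to be 100 percent.
software_case = probability_of_harm(p_harm_given_failure=0.01)

# Hardware failure with a known failure rate (hypothetical value).
hardware_case = probability_of_harm(p_harm_given_failure=0.01, p_failure=0.001)

print(software_case, hardware_case)
```

With the failure probability pinned to 1, the only levers left to reduce the risk are severity and the events that follow the failure, which is why mitigations outside the software matter so much.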

Happy New Year 2018

Consequences of the 21st Century Cures Act - State of Play

Since the last blog post on US FDA guidance on software classification, things evolved quickly with the FDA. We know where they want to go with software as medical device, but not exactly how they will implement it.

Let's do a review of what has been done since the publication of the 21st Century Cures Act.

21st Century Cures Act in December 2016

To begin, we've had the 21st Century Cures Act, with Section 3060 "Clarifying medical software regulation". This is the top-level document, as it is legislation passed by Congress in December 2016.

This Act was published after guidances on general wellness, mobile medical apps, and PACS and MDDS had been published in 2016. They are in final status, but when you download these guidances, they now begin with an additional cover page stating that they have to be revised.

Guidance on Section 3060 of the 21st Century Cures Act

To do so, the FDA published in December 2017 a draft guidance titled "Changes to Existing Medical Software Policies Resulting from Section 3060 of the 21st Century Cures Act". This is a guidance on how other guidances will be revised (you follow me?). We call it Section 3060 guidance in this article.

This guidance is organized by software functions, rather than types of platforms. This is to stress that software shall be qualified as a medical device based on its functions, not its platform. For example, the same function present in a mobile app or a web site will have the same regulatory status.

The Section 3060 guidance is good news: many software functions are removed from, or are confirmed to be outside, the scope of the medical device definition. The functions below are NOT medical devices:

- Laboratory Information Management Software (LIMS),

- Electronic Health Records (EHR) management systems,

- Personal Health Records (PHR) management systems,

- Medical Image Storage systems, such as Radiological Information System (RIS),

- Medical Image Communication systems, and

- Medical Device Data Systems (MDDS),

provided that they don't offer functions in the scope of the medical device definition, such as aid to diagnosis.

Note also that there is a footnote on Medical Image Communication devices in the guidance. Some devices will continue to be regulated as medical devices.

The Section 3060 guidance is also good news for software generating notifications and flags when a value is outside a range, like telemedicine or remote surveillance applications. Provided that these notifications don't require immediate clinical action, the FDA doesn't intend to enforce regulations.

Usually, when immediate clinical action is required, such software functions are named alarms and active patient monitoring.

The Section 3060 guidance is finally good news for a subset of wellness devices in the grey zone: those with functions for the management of a healthy lifestyle, while addressing chronic diseases or conditions. The FDA doesn't intend to enforce regulation on such devices either.

Comparison with European guidance

The Section 3060 guidance contains a section on software functions for transferring, storing, converting formats, displaying data and results. The wording used to name these functions is close to the one used in the European guidance MEDDEV 2.1/6 of July 2016.

The MEDDEV uses the terms storage, archival, communication, simple search or lossless compression. This is equivalent to transferring, storing, converting formats in the FDA guidance. The FDA uses converting formats, which has a broader meaning than lossless compression in the MEDDEV guidance. This can be interpreted as: the medical device definition in European regulations excludes medical data conversion unless information is lost during conversion.

For the wording displaying data and results present in the Section 3060 guidance, there is the same approach in the MEDDEV guidance: as long as software is not used for diagnosis or treatment, such as functions displaying data to a technician to verify that data are present, it is not a medical device.

Thus, we have a kind of convergence between the FDA guidance and the European guidance on qualification of software as medical device.

Work in progress

This guidance is still a work in progress. First, it is a draft guidance. Second, it refers to other guidances that are also works in progress:

- The draft guidance on Clinical Decision Support Software (will be the subject of a future blog post),

- A future guidance on multi-functionality software combining medical device and non-medical device functions (there's an old post on this subject),

- A future guidance on Medical Image Communication systems.

The section 3060 guidance is really good news for many software editors in the field of medical data management on the one hand, and wellness or healthy lifestyle on the other hand. Even if it is a draft guidance, things shouldn't radically change in the final version. The 21st Century Cures Act doesn't give much space to interpretation.

FDA Guidance on Medical Device Accessories updated

Here is a quick follow-up of the new version of the FDA Guidance titled Medical Device Accessories – Describing Accessories and Classification Pathways, published in December 2017. This comes a bit in parallel to the Section 3060 guidance described in the previous post on the 21st Century Cures Act.

The previous version of this guidance had been published in January 2017.

First remark, the FDA didn't include the changes of the accessories guidance in the Section 3060 guidance. This is understandable, as the final version of the accessories guidance had been published in January 2017 after the 21st Century Cures Act.

Second remark, the major update of the accessories guidance is the addition of a new section on Accessory Classification Process. It stems from the FDA Reauthorization Act of August 2017, which came after the 21st Century Cures Act. This section reflects the provisions of section 707 of the Reauthorization Act about accessories.

Optional accessories

One tiny change in the text of the guidance is the inclusion of optional articles in the definition of accessory.

An article intended to be used with a parent medical device and labelled as optional is an accessory.

Accessory classification process

The main change is the addition of the section named "Accessory Classification Process".

It makes the distinction between:

- New accessory types,

- Existing accessory types,

- New accessory types through the De Novo process.

The purpose of the section is to explain how to ask the FDA to classify an accessory of a parent device when the device is submitted in a 510(k), a PMA or a De Novo process. The guidance clarifies the possibilities for putting accessories in a class different from the parent device, with a risk-based approach.

Software As a Medical Device (SaMD) accessories

For Software As a Medical Device (SaMD), this guidance doesn't change much of the regulatory landscape. The recommendations of the guidance don't change about what kind of software should be deemed an accessory.

The following sentence, present in the version of January 2017 of the guidance, remains in this new version:

FDA intends for the risk- and regulatory control-based classification paradigm discussed in this guidance to apply to all software products that meet the definition of an accessory, including those that may also meet the definition of Software as a Medical Device (SaMD).

We now know with this new version of the guidance that we have these options to classify a SaMD, which comes as an accessory to a medical device, in a class different from the parent device.

Update of SRS and SAD templates for GDPR

European Regulation 2016/679, aka GDPR, will be fully in force in May 2018. Everybody has known since its publication that we have something to do to be compliant. And everybody is waking up only two months before the full application. So am I.

GDPR has a global impact on organizations managing personal data, and personal health data. The processes, software and IT infrastructure of the organization have to be reviewed and reworked to be compliant to GDPR. The purpose of this blog is on software, thus we restrict the view to software design only.

The impact on software medical devices is primarily in the software requirements, and by extension in the software architecture. Let's see why.

GDPR requirements for manufacturers of software medical devices

Software medical devices are either sold embedded in physical medical devices, deployed on the premises of the end-users, or used in remote mode, like SaaS or Cloud. In all these cases, the manufacturer isn't the Controller (article 24) of personal data, except in rare cases. The manufacturer can be the Processor (article 28) of personal data when the software device is used in remote mode by the healthcare provider.

Being both the manufacturer of a software medical device and a Processor places many requirements on the manufacturer. Again, we won't discuss that here: compliance with the GDPR requirements applicable to Processors is demonstrated by contract and organization rather than by software design.

Except in very rare cases, the Controller of personal data is the healthcare provider. Thus, manufacturers of software medical devices have to design and deliver software with the right functionalities, which will help the Controller to be compliant with GDPR.

Impact of GDPR Requirements on software requirements

In all the requirements of the GDPR, we can isolate a subset of requirements with an impact on software functionalities. The following additional functions may be required, depending on the device intended use:

| GDPR requirements | Software functionalities |
| --- | --- |
| Article 15 Right of access by the data subject | A function that outputs the items listed in paragraph 1 of the article. A function to export data, since the controller shall provide a copy of the personal data undergoing processing. |
| Article 16 Right to rectification | Functions to update data upon request, with a report of update. |
| Article 17 Right to erasure (‘right to be forgotten’) | Functions to permanently delete data, with a report of deletion. |
| Article 18 Right to restriction of processing | Functions to set some processing parameters to on or off, depending on the restriction. |
| Article 20 Right to data portability | Functions to export data in a common format (which one? good question!) |
| Article 21 Right to object | Functions to set some processing parameters to on or off, depending on the objection. |
| Article 22 Automated individual decision-making, including profiling | Functions to set some processing parameters to on or off, depending on the request. |

The use of such functions should come at the end of an administrative process initiated by the data subject. Thus, these functionalities are more likely to be used by administrators or data managers than by end-users with non-administrative profiles. Likewise, not all of these functions have to be present in software for it to be compliant with GDPR. The requirements mentioned above can also be handled by manual administrative processes.
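To give an idea of what such functions could look like, here is a minimal sketch built around an in-memory store. The class `PatientStore`, its method names, the JSON export format and the report fields are all assumptions made for illustration; GDPR doesn't prescribe any of them, and real software would also have to deal with backups, logs and access control.

```python
import json
from datetime import datetime, timezone
from typing import Dict, Set


class PatientStore:
    """In-memory stand-in for the application's database (illustration only)."""

    def __init__(self) -> None:
        self._records: Dict[str, dict] = {}
        self._restricted: Set[str] = set()

    def add(self, subject_id: str, data: dict) -> None:
        self._records[subject_id] = dict(data)

    def export(self, subject_id: str) -> str:
        """Articles 15 and 20: copy of the data in a common format (JSON here)."""
        return json.dumps(self._records[subject_id], sort_keys=True)

    def rectify(self, subject_id: str, field: str, value) -> dict:
        """Article 16: update one field and return a report of update."""
        self._records[subject_id][field] = value
        return self._report("rectification", subject_id)

    def erase(self, subject_id: str) -> dict:
        """Article 17: delete the data and return a report of deletion."""
        del self._records[subject_id]
        self._restricted.discard(subject_id)
        return self._report("erasure", subject_id)

    def restrict(self, subject_id: str, restricted: bool) -> None:
        """Articles 18 and 21: switch processing off or on for this data subject."""
        if restricted:
            self._restricted.add(subject_id)
        else:
            self._restricted.discard(subject_id)

    def can_process(self, subject_id: str) -> bool:
        """Processing is allowed only for known, non-restricted data subjects."""
        return subject_id in self._records and subject_id not in self._restricted

    @staticmethod
    def _report(action: str, subject_id: str) -> dict:
        return {"action": action, "subject": subject_id,
                "timestamp": datetime.now(timezone.utc).isoformat()}


store = PatientStore()
store.add("p-001", {"name": "Jane Doe", "hba1c": 6.1})
exported = store.export("p-001")
store.restrict("p-001", True)
blocked = not store.can_process("p-001")
report = store.erase("p-001")
```

Each operation that changes data returns a report, so that the administrator who ran it has a record to attach to the administrative request.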

Impact of GDPR requirements on software architecture

In the same spirit, we have two articles with an impact on the architecture design:

| GDPR requirement | Software architecture |
| --- | --- |
| Article 25 Data protection by design and by default | The architecture shall be designed to: implement appropriate technical and organisational measures, such as pseudonymisation, which are designed to implement data-protection principles; integrate the necessary safeguards into the processing in order to meet the requirements of this Regulation and protect the rights of data subjects; ensure that, by default, only personal data which are necessary for each specific purpose of the processing are processed; ensure that, by default, personal data are not made accessible without the individual's intervention to an indefinite number of natural persons. |
| Article 32 Security of processing | The architecture shall implement appropriate measures, commensurate to the risks on personal data: (a) the pseudonymisation and encryption of personal data; (b) the ability to ensure the ongoing confidentiality, integrity, availability and resilience of processing systems and services; (c) the ability to restore the availability and access to personal data in a timely manner in the event of a physical or technical incident; (d) a process for regularly testing, assessing and evaluating the effectiveness of technical and organisational measures for ensuring the security of the processing. |

These requirements are consistent with other risk-based approaches, like the requirements on risks in the Medical Device Regulation 2017/745 Annex I, chapter I, bullet 4 (a): eliminate or reduce risks as far as possible through safe design and manufacture, and ISO 14971 section 6.2 Safety inherent by design. We come back here to the previous articles discussing cybersecurity in medical devices.

The architectural design is strongly influenced by the risk-based approach. A Privacy Impact Assessment (PIA) has to be conducted by the Controller of the data. To help Controllers manage their PIA, the software manufacturer can anticipate this assessment by performing its own risk assessment and implementing mitigation actions, which will be referenced in the PIA, as appropriate.
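Pseudonymisation, mentioned in articles 25 and 32, is one mitigation that can be decided at the architecture level. The sketch below uses a keyed hash (HMAC-SHA256) from the Python standard library to replace an identifier with a stable pseudonym. This is only one possible technique, chosen here for illustration; the function name, the record fields and the demo key are assumptions, and key management is out of scope.

```python
import hashlib
import hmac


def pseudonymise(patient_id: str, secret_key: bytes) -> str:
    """Return a stable pseudonym for patient_id, using a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Demo key only: a real key would be stored and rotated outside the source code.
key = b"demo-key-do-not-use-in-production"

record = {"patient_id": "p-001", "hba1c": 6.1}
# Copy the record and replace the identifier with its pseudonym.
pseudonymised = {**record, "patient_id": pseudonymise(record["patient_id"], key)}
print(pseudonymised)
```

The same identifier always gives the same pseudonym for a given key, so records can still be linked together, while anyone without the key cannot recompute the mapping.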

Templates SRS and SAD

As a consequence of these new requirements, the SRS and SAD templates have been updated. New sections on GDPR, and by extension HIPAA, have been added:

- Download Software Requirements Specifications template,

- Download System Architecture Document template.

You will also find them in the templates repository page.

I share these templates under the conditions of the CC-BY-NC-ND license.

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 3.0 France License.

IEC 62366-1 and Usability engineering for software

Usability is a requirement that has been present in regulations for a long time. It stems from the assessment of user error as a hazardous situation. It is supported by the publication of the AAMI HE75 standard, FDA guidances, and the publication of IEC 62366 in 2008, followed by IEC 62366-1:2015. Although usability engineering is a requirement for the design of medical devices, most people designing software are not familiar with this process. This article applies the process described in IEC 62366-1 to software design.

Before applying this without critical thinking, please take note that what is described below may not be enough for cases where use errors can have severe consequences, e.g. devices intended to be sold to end-users directly. In such cases, requesting the services of specialists in human factors engineering is probably the best solution.

Usability engineering plan

Write what you do, do what you write. The story begins with a plan, as usual in the quality world. The usability engineering plan shall describe the process and provisions put in place. For standalone software, this process lives in parallel to the software design process. The usability engineering plan can be a section of the software development plan, or a separated document. The usability engineering plan describes the following topics:

- Input data review,

- Definition of use specification,

- Link with risk management,

- User interface specification,

- Formative evaluation protocol,

- Formative evaluation report,

- Design review(s),

- Summative evaluation protocol,

- Summative evaluation report,

- Usability validation.

Note: you can use the structure and content below in this article to write your own usability engineering plan (if you can afford not to pay for usability engineering specialists :-)).

Usability input data

Usability input data is a subset of design input data. They are gathered before or at the beginning of the design and development project. Depending on the context of the project, they can contain:

- Statements of work,

- User requirements collected by sales personnel, product managers …,

- Data from previous projects,

- Feedback from users on previous versions of medical devices,

- Documentation on similar medical devices,

- Specific standards, like IEC 60601-1-11 on medical electrical equipment used in the home healthcare environment,

- Regulatory requirements, like IFU or labeling.

Usability input data are reviewed along with other design input data. So, you should include these data in your design input data review.

Preparing the use specification

The use specification is a high-level statement, which contains information necessary to identify:

- the user groups which are going to be subject of the usability engineering process,

- the use environment in which the device is going to be used,

- the medical indications which are needed to be explored further.

The use specification shall include the:

- Intended medical indication;

- Intended patient population;

- Intended part of the body or type of tissue applied to or interacted with;

- Intended user profiles;

- Use environment; and

- Operating principle.

Preparing the use specification can make use of various methods, for example:

- Contextual enquiries in the user's workplace,

- Interview and survey techniques,

- Expert reviews,

- Advisory panel reviews.

Usually, the use specification is prepared with expert reviews. This method is the simplest to implement (once again if you can afford not to use other methods :-))

The use specification is recorded in the usability management file.

Analysis

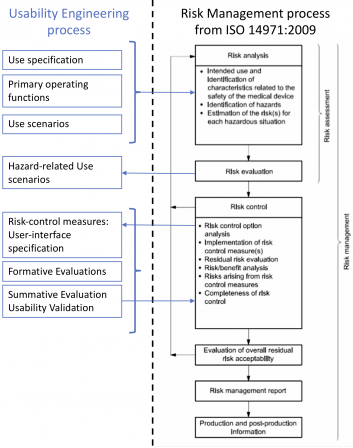

The usability engineering process is performed in parallel to the ISO 14971 risk management process.

Below is a diagram showing the links between the risk management process and the usability engineering process. This diagram is non-exhaustive and for clarification purposes only.

I didn’t represent the software development process on this diagram. It is not the purpose of this article to show the relationships between software development and risk management. Please have a look at this post on ISO 14971 if you’re keen on refreshing your memory on software development.

Identifying characteristics for safety

This step sounds clearly like risk management. It consists in identifying:

- The primary operating functions in the device,

- The use scenarios,

- The possible use errors.

As a first approach, you can answer the questions in ISO 14971 annex C to identify characteristics related to safety. If the human-software interaction is prone to be a source of critical hazardous situations, more advanced methods may be required.

These data (primary operating functions, use scenarios and possible user errors) are recorded in the usability management file.

For software, the primary operating functions and use scenarios can be modelled with use-case diagrams and descriptions. Note that UML requires that a use-case diagram contains a text description of the use-cases.

Identifying hazardous phenomena and hazardous situations

This step consists in identifying the hazardous phenomena and hazardous situations (ditto). This is classical risk assessment. They are identified with data coming from:

- The use specification,

- Data from comparable devices or previous generations of the device,

- User errors identified in the previous step.

These elements are documented in the risk management file. They can be placed in a section specific to human factors.

Examples of user-related hazardous phenomena and situations:

- A warning is displayed, the user doesn’t see it,

- A value is out of bounds, the user doesn’t see it,

- The GUI is ill-formed, the user doesn’t understand it.

Identifying and describing hazard-related use scenarios

This step is once again risk analysis: the hazardous phenomena, the sequences of events, and the hazards resulting from human factors are identified. These elements are documented in the risk management file accordingly.

Selecting hazard-related scenarios for summative evaluation

It is not required to submit all hazard-related scenarios to the summative evaluation. It is possible to select a subset of these scenarios based on objective criteria.

Usually, the criterion is: select the hazard-related scenarios where the severity is at or above a given threshold, e.g. Severity ≥ Moderate. You shall write your own criteria in the usability plan.

Said the other way round, it’s not worth including scenarios with low risks in the summative evaluation.
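The selection criterion above can be sketched in a few lines. The severity scale and the scenario names below are hypothetical; use the scale defined in your own risk management plan and the threshold written in your usability plan.

```python
from enum import IntEnum


class Severity(IntEnum):
    # Hypothetical scale; replace with the one from your risk management plan.
    NEGLIGIBLE = 1
    MINOR = 2
    MODERATE = 3
    MAJOR = 4
    CRITICAL = 5


def select_for_summative(scenarios, threshold=Severity.MODERATE):
    """Keep the hazard-related scenarios whose severity is at or above the threshold."""
    return [name for name, severity in scenarios if severity >= threshold]


# Illustrative scenarios only.
scenarios = [
    ("Warning displayed but not seen", Severity.MAJOR),
    ("Out-of-bounds value not noticed", Severity.MODERATE),
    ("Date format misread in a report footer", Severity.MINOR),
]
selected = select_for_summative(scenarios)
print(selected)
```

Here the third scenario is left out of the summative evaluation because its severity is below the threshold.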

Identifying mitigation actions and documenting the user interface specification

The risks related to the use scenarios are then evaluated according to the risk management plan (severity, frequency, and possibly detectability if you included that parameter in your risk management plan), and mitigation actions are identified by following the risk management process.

Identification of mitigation actions can be done either before or during the formative evaluations. It is necessary to confirm the validity of the mitigation actions during the formative evaluations.

The mitigation actions are documented in the user interface specification, in order of priority (see 6.2 of ISO 14971):

- Changes in user-interface design, including warnings like message boxes,

- Training to users,

- Information in the accompanying documents: IFU and labeling.

For software, the user interface specification can be included in the software requirement specification.

Note that warnings in the graphical user-interface can be seen as a design change, and not as information to the user. But this shall be verified in the summative evaluation. The warning message shall be relevant enough, placed at the right step in the workflow, and change the user’s mindset to avoid a hazardous situation.

Design and Formative Evaluation

The formative evaluation is performed during the design phase. You can have one or more formative evaluations. The sequence of formative evaluations in the design project depends on the software being designed. There is no canonical sequence of formative evaluations. At least one formative evaluation is required, though this could be a bit too short. Two formative evaluations sound like a good fit. The points not identified or discussed in the first evaluation can be treated in the second evaluation.

To be consistent with the design and development project, the formative evaluations should be placed before the last design review. Thus, the design and the user interface are “frozen” after the design review. This doesn’t mean that the formative evaluation happens during the design review.

The formative evaluation can be done with or without the contribution of end-users. The methods of evaluation depend on the context: questionnaires, interviews, presentations of mock-ups, observation of use of prototypes.

For software, the commonly adopted solution is the presentation of mock-ups or prototypes, with end-user proxies (like product managers, biomedical engineers) and end-users who can “play” with the mock-ups. It is also a good option to let the end-user proxies review the mock-ups to “debug” them before presenting them to real end-users, so that the presentation doesn’t deviate (too much) with wacky requests from end-users.

Summative evaluation

The summative evaluation is performed at the end of the design phase. It can be done after the verification, or during the validation of the device or, if relevant or possible, during clinical investigations. It aims at bringing evidence that the risks related to human factors are mitigated. The position of the summative evaluation depends on the context of your project.

The summative evaluation shall be done with a population of end-users large enough to be representative for the evaluation, e.g. at least 15 users of each profile defined in the use specification (the minimum recommended in the FDA guidance on Human Factors Engineering for validation testing). The summative evaluation shall be done for every scenario selected according to the criteria defined above (e.g. severity ≥ moderate). The methods of evaluation are left to your choice, depending on the context, e.g. use in a simulated environment or use in the target environment.

If the data collected during the summative evaluation don’t allow you to conclude that the mitigation actions are effective, or if new risks are identified, you shall either redo the usability engineering process iteratively, or bring a rationale on the acceptability of the individual residual risks and of the overall residual risk. The rationale can be sought in the risk/benefit ratio of the use of your device.

For software, the solution commonly adopted is free tests performed by selected end-users on a beta version or a release candidate version. The summative evaluation can end with the analysis of a questionnaire filled by the selected end-users.

The user interface of the device is deemed validated when the conclusion of the summative evaluation is positive. You’re done, good job!

Application to agile methods

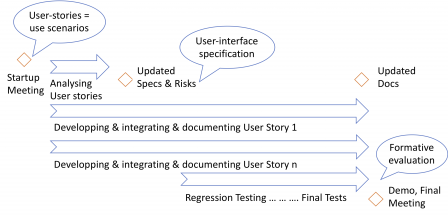

The steps described above can be disseminated in the increments of an agile development process.

Use specification, primary operating functions

Use specification and primary operating functions are usually defined in the initialization/inception phase of the project. This phase sets the ground for the software functions and architecture, so it is the natural place to define them.

Depending on how much you know about the software being developed, the initialization can also be the right time to write the use scenarios. If you already know, say, 80% of the user requirements, you can write the use scenarios and make the risk assessment on these scenarios at the beginning of the project. If you don’t know much on your future software, the use scenarios have to be defined/updated during the iterations.

Iterations and usability engineering

The next steps of the usability engineering process are performed during iterations, as shown in the following diagram and explained in the next subsections.

Use scenarios and hazards mitigation

The “objects” you manipulate during the iterations are epics and user-stories. They can be seen as use scenarios or small chunks of use scenarios, depending on their size. Thus, you can use them to identify hazards related to user-errors, identify mitigation actions, and update the user-interface specification accordingly.

Depending on the items present in the backlog (e.g. a brand-new use scenario), it is also possible that you have to update the use specification and the list of primary operating functions during an iteration.

Formative evaluations

Agile methods usually define “personas”, which represent the user-profiles, and are used by the software development team to understand the behavior of the users.

You may base your formative evaluation on the use of these personas. In his/her role of end-user proxy for the team, the product owner is responsible for the formative evaluation. He/she does the formative evaluation of the user-stories. He/she may invite another person external to the team (or to the company) to participate in the formative evaluation.

You can do the formative evaluation during the demonstration of the software at the end of the iteration. Depending on the results of the formative evaluation, new items related to the user-interface may be added to the backlog and implemented in a further iteration.

Summative evaluation

The summative evaluation is placed after the verification phase of the agile software development process. It is performed as described in the “Summative Evaluation” section above.

Incremental summative evaluation may be performed with intermediate releases. I don’t recommend that method. The user-interface is subject to changes in a further intermediate release, invalidating the conclusions of an incremental summative evaluation.

A way to see things is to say that summative evaluation isn’t something agile.

Conclusion

I hope you have a better understanding of how to implement IEC 62366-1:2015 in your software development process. Remember that I'm in software above all, human factors engineering isn't my background.

Congratulations and hate comments are welcome!

Cybersecurity - Part 5 Templates

Hi there! Long time no see once again. I dig up our series of posts on cybersecurity.

In this post I publish two new templates for cybersecurity risk management.

The list of standards and guidances dealing with cybersecurity in medical devices has evolved a lot over the last two years:

- At first we had the FDA guidances on cybersecurity published in 2014-2016,

- Then AAMI TIR 57 was released in 2016, it describes a security risk management process comparable to the safety risk management process of ISO 14971,

- And in 2017, ANSI/UL 2900-1, and ANSI/UL 2900-2-x were released, they list requirements (sometimes very specific) to design and maintain a secure medical device.

Note that a traceability exists between UL 2900-x standards and FDA guidances on cybersecurity. I think it's unofficial, though. I let you find it on the web.

All these guidances and standards represent a good source of information to implement a cybersecurity risk management process. However, if we extend the scope to other industries, the risk management process described in ISO 27005 is also a good start.

This is the approach I have followed in the past few years: begin with the ISO 27005 process and add provisions specific to medical devices, based on recommendations from AAMI TIR 57 and requirements from ISO 14971.

This risk management process is then fed with guidances found in informative part of ISO 27005, AAMI TIR 57, as well as provisions found in UL 2900-x.

The two templates are based on this approach:

You will also find them in the templates repository page.

I share these templates under the conditions of the CC-BY-NC-ND license.

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 3.0 France License.

Cybersecurity - Draft guidances from FDA and Health Canada

The US FDA published in October 2018 a new draft version of its guidance on the content of premarket submissions for management of cybersecurity in medical devices. Two months later, Health Canada published in December 2018 a draft guidance document on pre-market requirements for medical device cybersecurity.

We are surrounded by draft guidances!

US FDA Draft Guidance

To refresh your memory, this guidance defines the documentation to compile about cybersecurity for a premarket submission. The new draft guidance brings three significant changes, compared to the previous guidance of 2014:

- The concept of trustworthy device,

- A categorization of software: tier 1 and tier 2,

- A list of recommended mitigation actions by design.

Like the previous approved version, this draft guidance relies on the NIST Cybersecurity Framework to manage cybersecurity:

- Identify, protect,

- Detect,

- Respond,

- Recover.

That's a good thing, as this framework is freely and globally available on the NIST website for all medical devices manufacturers around the world. Free documentation is a good option when you want to promote security and protection from criminal organizations!

Trustworthy device

Cybersecurity introduces lots of new concepts for people who have never rubbed shoulders with cybersecurity. To make a parallel with patient safety, the concept of trustworthy device (you will find the definition in the guidance) is equivalent to the concept of validated device in terms of safety and clinical performance.

If a device is clinically validated, you trust it for patient management. If a device is trustworthy in the meaning of this guidance, you trust it for cybersecurity protection.

Tier 1 and Tier 2 devices

The really good news is the introduction of a hint of explicit risk-based approach in this guidance. It defines two categories:

- Tier 1: Higher Cybersecurity Risk,

- Tier 2: Standard Cybersecurity Risk.

The level of evidence to bring to the FDA, to demonstrate that your device is a trustworthy one, is higher for tier 1 (full documentation) and lower for tier 2 (rationale-based partial documentation).

Thus, introducing cybersecurity risk assessment in your risk management process is a good idea.

List of recommended mitigation actions by design

The other good news is the clarification of FDA's expectations on design for cybersecurity. The guidance lists no less than 37 design measures for cybersecurity management. It is striking that these measures are numerous and precise. There is probably a will of the FDA to promote the design of secure devices by directly pointing to the relevant measures.

The drawback of this list is that it focuses on a subset of measures, drawing the attention of the reader to these measures only. (I had to find a drawback, no?)

It is also worth noting that most of the recommendations can be found in the UL 2900-1 standard (to be reviewed in a next post).

Labeling recommendations

To end this quick tour of this guidance, just two remarks on labeling recommendations.

Information on cybersecurity has to be disclosed to end-users. This is a basic principle of cybersecurity management that you will find in other documents like the ISO 2700x family of standards. The FDA follows this principle in its labeling recommendations.

And I can't help but finish with this last comment on the Cybersecurity Bill Of Material (CBOM). The CBOM is the list of all off-the-shelf software components that are or could become susceptible to vulnerabilities. The CBOM shall be cross-referenced with the National Vulnerability Database (NVD) or a similar known vulnerability database.

Do you like IEC 62304 requirements on SOUP periodic review? Trust me, you are going to love CBOM - NVD cross-reference!!!
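To give an idea of what the CBOM cross-reference means in practice, here is a minimal sketch. It assumes that you have a local extract of a vulnerability database, keyed by component name and version. The component names, versions and vulnerability identifiers are hypothetical, and matching against the real NVD is more involved (product identifiers, version ranges).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """One line of the CBOM (hypothetical structure)."""
    name: str
    version: str


def cross_reference(cbom, known_vulnerabilities):
    """Map each CBOM component to the vulnerability identifiers recorded for it."""
    findings = {}
    for component in cbom:
        ids = known_vulnerabilities.get((component.name, component.version), [])
        if ids:
            findings[component] = sorted(ids)
    return findings


# Hypothetical CBOM and hypothetical vulnerability identifiers.
cbom = [Component("examplelib", "1.0.2"), Component("exampledb", "3.1")]
known_vulnerabilities = {("examplelib", "1.0.2"): ["VULN-0002", "VULN-0001"]}

findings = cross_reference(cbom, known_vulnerabilities)
for component, ids in findings.items():
    print(component.name, component.version, ids)
```

The point of the exercise is the periodic repetition: the CBOM rarely changes, the vulnerability database changes all the time.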

Health Canada Draft Guidance

Like the US FDA guidance, this guidance gives recommendations on documentation to provide with medical device licence submission.

The guidance recognizes that a cybersecurity strategy shall be defined for devices incorporating software, from class I to class IV. This strategy should include:

- Secure design

- Risk management

- Verification and validation testing

- Planning for continued monitoring of and response to emerging risks and threats

Unlike the US FDA Tier 1 and 2 categories, the full Canadian guidance is applicable to all regulatory classes. However, the documentation is only reviewed by Health Canada for class III and class IV medical device licence applications. Class I is a simple registration, and class II doesn't require submitting the design dossier.

NIST Cybersecurity Framework

Like the US FDA guidance, the Canadian guidance relies on the NIST framework for cybersecurity management. Yes, you read it right, Canada references a document available on nist.gov website.

Cybersecurity measures

Like the US FDA guidance, the Canadian one gives a list of design measures to envision: no less than 17 measures are found in tables 1 and 3. It is worth noting that some of these measures come from UL 2900-1, referenced by the guidance.

Safety risk vs security risk

This draft guidance references AAMI TIR 57. It also contains screen shots (figure 2) of AAMI TIR 57 on the relationship between safety risk management and cybersecurity risk management.

Table 2 gives examples of the relationship between cybersecurity risk management and patient safety management. Beware if you read this table: you have to understand that each line is a chunk of a risk management process. E.g. the line “Security risk with a safety impact” contains “Not applicable” for Security Control, hence the line focuses on the risk, not the control.

Medical Device Licence Application

All in all, the main goal of this guidance is to give recommendations on the content of a medical device licence application for class III and IV devices, also applicable to class II and class I design files. It embraces the state-of-the-art in this discipline and defines how it should be documented.

UL 2900-1 and AAMI TIR 57

On the normative side, the Canadian guidance references a few standards and guidances. We find AAMI TIR 57. It gives very good hints on the relationship between security risk and safety risk management.

We also have UL 2900-1, the one and only standard addressing cybersecurity in medical device design, up to now. UL 2900-1 overlaps partially with the two draft guidances. All design measures found in the guidances are found in UL 2900-1. Thus UL 2900-1 sets a common ground for the design of medical devices.

We will see that in the next post.

BTW, IEC 62304 and ISO 14971 are also referenced. Like everyday life!

Conclusion

North American agencies are strengthening their expectations on cybersecurity in regulatory submissions. Health Canada is the newcomer, with their guidance copyright Her Majesty the Queen in Right of Canada. (Who is also Her Majesty the Queen of the UK!).

Hey European Commission, where is your guidance to address General Requirements #17 and #23.ab in Annex I of Regulation (EU) 2017/745?

When the ANSM French Authority update their website without notice

To be software as a medical device or not to be.

That is the question.

And you rely on Competent Authorities to determine whether software is a medical device or not.

For example, you have some practical examples on the ANSM website (Google Translate is my friend): here is the latest version.

And you wish you had a way to be notified by the ANSM when the page changes. Hell no, no way.

Especially, you wish you had been notified when the ANSM changed their mind on telesurveillance software: here is the previous version on archive.org if you want to compare with the latest.

Before: examples showing that communicating data, like tele follow-up is not a MD, with the invocation of expert function (found in MEDDEV 2.1/6 only for qualification of IVD, not MD) to exclude software without such function.

After: examples showing that tele surveillance is a MD (exit the expert function).

Arrgghh, This isn't Good Regulatory Practice.

MDR: one year left and too late for class I software

Today is the 26th of May 2019. Rings a bell? In one year exactly, your class I MD software will be living on borrowed time on the EU market.

Why?

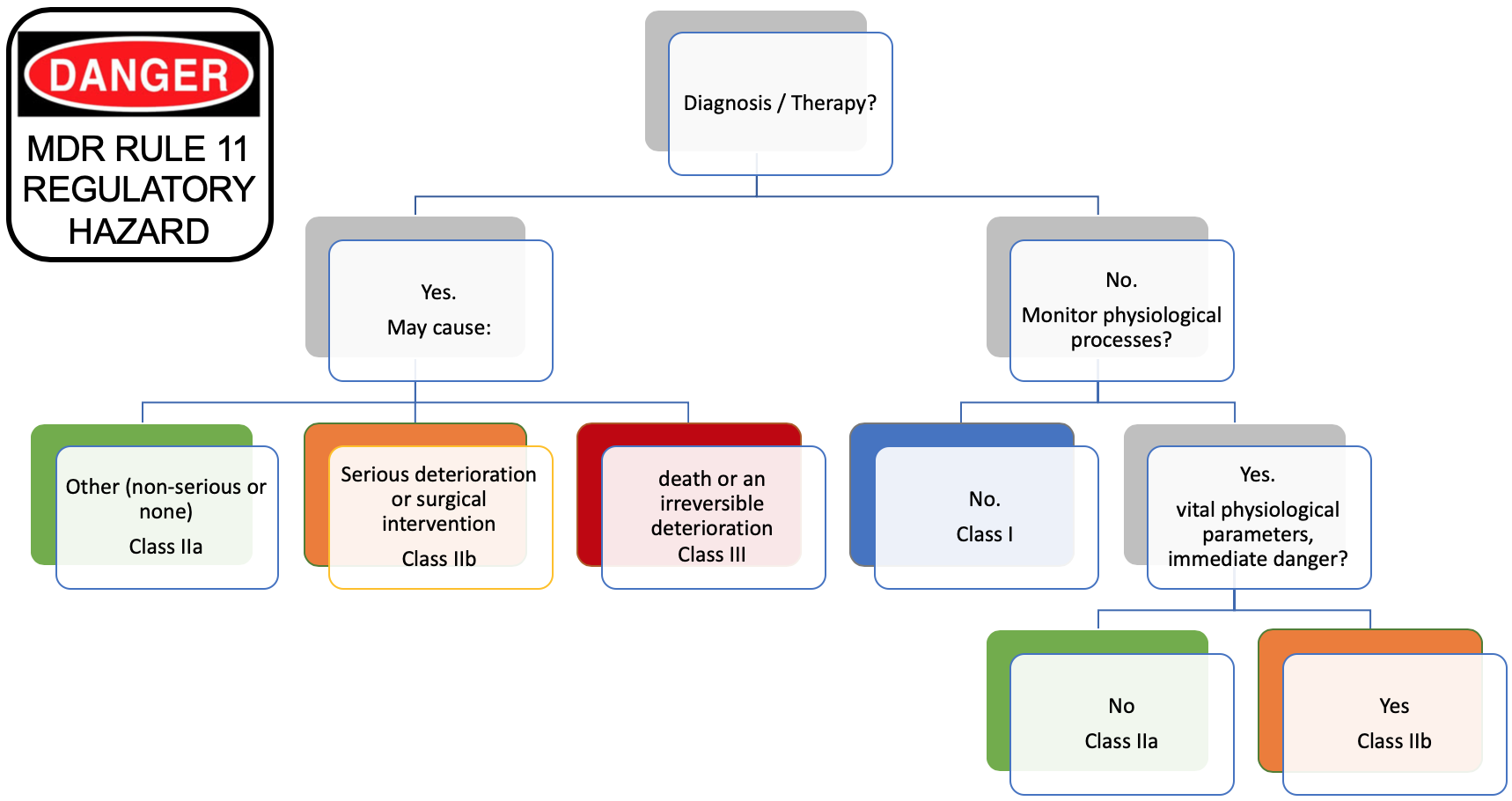

Because of rule 11 of the Medical Device Regulation (EU) 2017/745 (MDR).

As mentioned in a previous article on MDR, rule 11 impacts all manufacturers of software medical devices. More terrible news: the rule is applicable to all software whatsoever, standalone or embedded, for rule 11 doesn't contain the word "standalone" or "embedded".

In this context, I can assert that a majority of active devices will be at least in class IIa, and most standalone software, if not 99%, will be in class IIa.

Why?

Because of rule 11 of MDR.

The rogue, the villainous rule 11.

Class IIa for everybody

Definition of medical device

First, for software, the definition of a medical device didn't change between the directive and the MDR. Some words like "prediction, prognosis" were added, which clarify the definition. But these are minor changes. So, if your software is a medical device with the 93/42/EEC Medical Device Directive (MDD), it is a medical device with the MDR.

Qualification of medical device

Software embedded in a hardware medical device is a medical device. Simple case. Standalone software, i.e. software running on non-dedicated hardware like a PC, tablet, smartphone etc., is more subject to interpretation.

Now that the European Court of Justice set a legal precedent with the MEDDEV 2.1/6, we can officially use the decision tree #1 in this MEDDEV to determine whether standalone software (aka Software as Medical Device, SaMD) is a medical device or not.

Using this workflow, if we have the three conditions:

- Software performing functions other than storage, communication and "simple search" (simple search has a very broad interpretation, have a look at the manual on borderline classification),

- Software delivering new information, based on individual patient's data, and

- The manufacturer (you) claiming that this new information is for diagnosis or treatment purposes,

Then, this software is a medical device.

To make it short:

If software for diagnosis or treatment purposes then medical device.

Note: I put apart the cases of accessories and implementing rules, extensive interpretation of "simple search" of MEDDEV 2.1/6 or manual on borderline classification.
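The three conditions above boil down to a simple conjunction, sketched below. The function and parameter names are hypothetical, and the sketch deliberately ignores the cases put apart in the note (accessories, implementing rules, extensive interpretation of "simple search"). It is in no way a substitute for the decision tree of MEDDEV 2.1/6.

```python
def is_medical_device(beyond_storage_communication_simple_search: bool,
                      new_info_from_individual_patient_data: bool,
                      claimed_for_diagnosis_or_treatment: bool) -> bool:
    """Return True when the three conditions listed above are all met."""
    return (beyond_storage_communication_simple_search
            and new_info_from_individual_patient_data
            and claimed_for_diagnosis_or_treatment)


# Software that only stores and displays patient records.
print(is_medical_device(False, False, False))

# Software computing a score from a patient's data, claimed as an aid to diagnosis.
print(is_medical_device(True, True, True))
```

Note that the third parameter is the only one fully in your hands: it is your claim as a manufacturer.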

Classification of medical device

With the MDD, most software is in class I, unless you're unlucky enough to fall in a higher class per MEDDEV 2.4/1 rev.9 or the Manual on Borderline Classification.

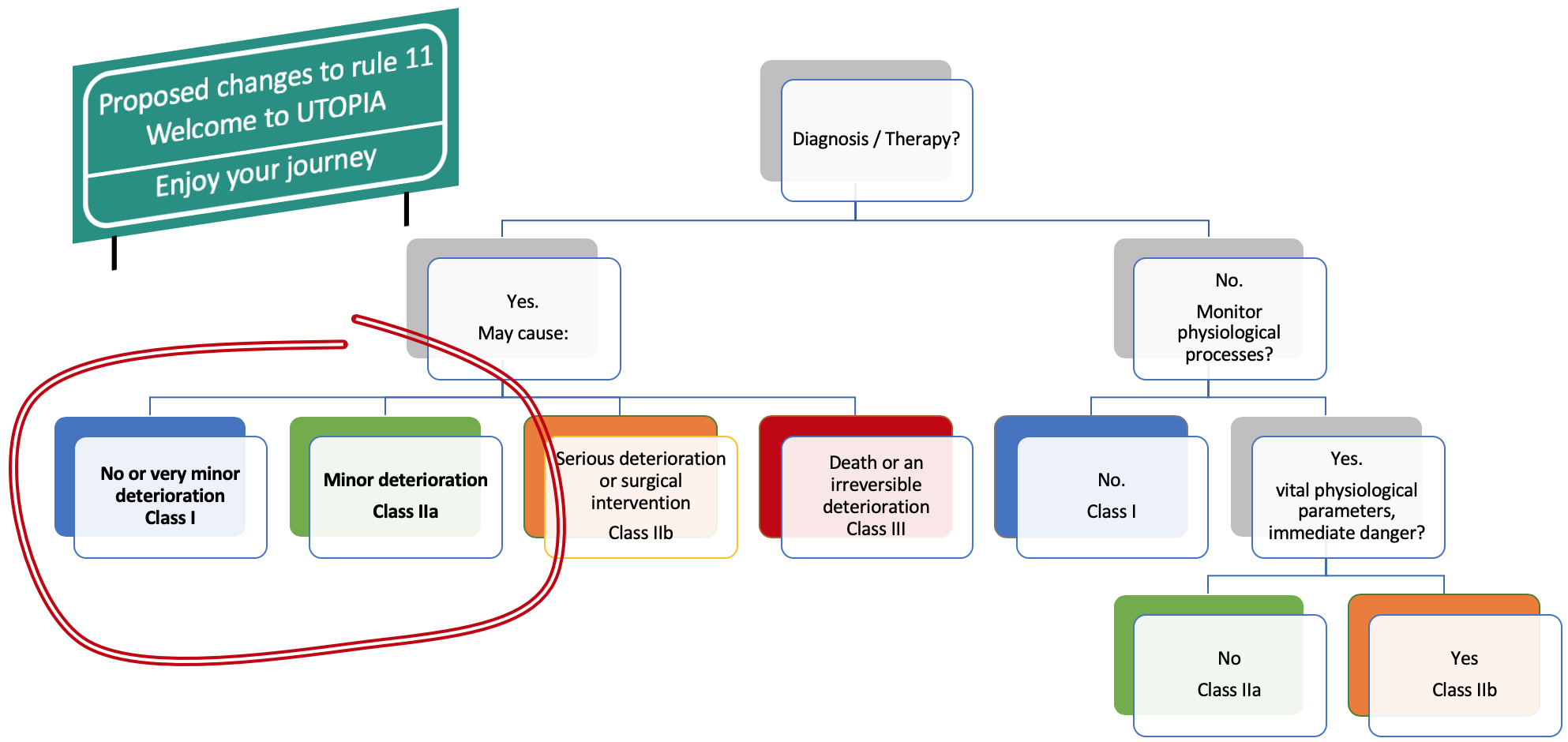

With the new rule 11 of the MDR, things get harder. Standalone or embedded, apply rule 11 if your medical device contains software.

The tree below shows the rule in a graphical representation:

We can see that Software intended to provide information which is used to take decisions with diagnosis or therapeutic purposes is in class IIa or higher (left part of the tree).

But we know per MEDDEV 2.1/6 that standalone software is qualified as a medical device if the manufacturer claims that the intended use is for diagnosis or treatment.

Thus, standalone software qualified as a medical device is automatically classified in class IIa or higher. Moreover, this rule is applicable to standalone software and embedded software, like IoT and connected widgets of all sorts that have blossomed over the last 3-4 years.

To make it short:

If software in class I with MDD then software in class IIa with MDR.

Note: I put apart the cases of accessories, implementing rules, monitoring, and some residual cases of SaMD not for diagnosis or treatment purposes.
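For those who prefer code to trees, here is a minimal sketch of rule 11 as I read it in the text of the MDR. The enum, function and parameter names are hypothetical, and the sketch ignores the other classification rules and the implementing rules, which may push a device into a higher class.

```python
from enum import Enum


class Impact(Enum):
    """Worst impact of a decision taken with the information provided (hypothetical names)."""
    DEATH_OR_IRREVERSIBLE_DETERIORATION = "death or irreversible deterioration"
    SERIOUS_DETERIORATION_OR_SURGERY = "serious deterioration or surgical intervention"
    OTHER = "other"


def rule_11_class(informs_diagnosis_or_therapy_decisions: bool,
                  worst_impact: Impact = Impact.OTHER,
                  monitors_physiological_processes: bool = False,
                  vital_parameters_with_immediate_danger: bool = False) -> str:
    """Return the class given by rule 11 alone."""
    if informs_diagnosis_or_therapy_decisions:
        if worst_impact is Impact.DEATH_OR_IRREVERSIBLE_DETERIORATION:
            return "III"
        if worst_impact is Impact.SERIOUS_DETERIORATION_OR_SURGERY:
            return "IIb"
        return "IIa"
    if monitors_physiological_processes:
        return "IIb" if vital_parameters_with_immediate_danger else "IIa"
    return "I"  # All other software


print(rule_11_class(True))
print(rule_11_class(False))
```

The first branch is the painful one: as soon as the software informs a decision with diagnosis or therapeutic purposes, class I is out of reach.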

Rewriting rule 11?

We were told in our management courses that mourning and yelling isn't a good thing. Let's try being positive, and rewrite the rule 11 in a way more consistent with a risk-based approach!

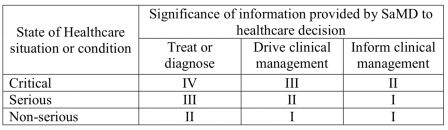

We can get inspiration from the IMDRF SaMD Risk Categorization Framework. The table below comes from the IMDRF document:

This table shows that software driving clinical management (namely aid to diagnosis) for non-serious diseases is in class I. Thus, there is a consensus at the IMDRF level that an intended use claiming an aid to diagnosis on a non-serious disease can be in IMDRF category I (equivalent of EU regulatory class I).

In the examples of the IMDRF document, we find in category I (class I):

SaMD that analyzes images, movement of the eye or other information to guide next diagnostic action of astigmatism.

If this is not an aid to diagnosis, I don't know what it is.
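The IMDRF categorization can be written as a simple lookup, sketched below from my reading of the IMDRF SaMD risk categorization table. The keys and the function name are hypothetical shorthands for the two axes of the table: state of the healthcare situation or condition, and significance of the information provided by the SaMD.

```python
# (state of healthcare situation, significance of information) -> IMDRF category
IMDRF_CATEGORY = {
    ("critical", "treat_or_diagnose"): "IV",
    ("critical", "drive_clinical_management"): "III",
    ("critical", "inform_clinical_management"): "II",
    ("serious", "treat_or_diagnose"): "III",
    ("serious", "drive_clinical_management"): "II",
    ("serious", "inform_clinical_management"): "I",
    ("non_serious", "treat_or_diagnose"): "II",
    ("non_serious", "drive_clinical_management"): "I",
    ("non_serious", "inform_clinical_management"): "I",
}


def imdrf_category(state: str, significance: str) -> str:
    """Return the IMDRF SaMD category for a state/significance pair."""
    return IMDRF_CATEGORY[(state, significance)]


# The astigmatism example: guiding the next diagnostic action on a non-serious condition.
print(imdrf_category("non_serious", "drive_clinical_management"))
```

Unlike rule 11, the category depends on the seriousness of the condition and not only on the purpose of the software.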

Taking this information, we could change the rule 11 to align the regulatory classes with the consequences for the patient of a software failure. Here is the result of the modified rule 11 (wording to be improved, just food for thought):

The changes proposed to rule 11 are pure speculation or utopia. Alas, we must live with the dystopian rule 11. So, what can we do?

Action plan

You have software in class I and the medical device directive hasn't been a big deal for you so far? Here are some possible actions.

Stay away, ever!

Claiming that your product is a medical device is a marketing argument? Stay away! Don't listen to marketing departments, who want the CE mark on the device because competitors blah blah. They hardly know the consequences.

Stop a product line with medical intended use. Or carefully carve your intended use to stay outside the definition of medical device. Stay inside the limits of storage, communication, and simple search. Don't claim a medical device intended use.

Stay away, as long as possible!

Postpone new products with medical intended use to 2021 or later. Wait for the dust to settle.

Go to the American market first!

Prepare the transition, now!

If your devices are definitely medical devices, and the EU market is strategic, then you have no choice. You must plan a transition to the EU MDR. If you read these lines and have been in the MD industry for long, I suppose that you've already set up (or thought to set up) an action plan, and that you shudder at the thought of even finding a Notified Body.

If you are new to the MD industry, don't think about a transition unless you have a very good reason to do so. Go back to the first two actions, and consider seriously other markets, like the USA. I'm not happy to write that.

Costs and schedule

I can throw a few figures to give you an idea of the immensity of the task:

- 100 kEuros (or 100 kDollars, or 6.02E23 Imperial Credits) to prepare your Class IIa MDR submission,

- In the worst case, add a clinical investigation,

- At least one year to get the CE certificate,

- At least one person full-time managing the CE marking project,

- Changes you can't imagine in the design of your software,

- About 50-100 documents to write and review, and maintain in the long run,

- A PMS and PMCF to set up (If you don't know what a PMS and PMCF are: go back to the first two possible actions).

You have an idea of the order of magnitude of the human and financial effort.

Too late for the directive

Don't pin your hopes on changing the intended use of your existing device and getting a certification with the MDD in class IIa. The notified bodies no longer take new submissions with the MDD. They're fully booked. The availability of Notified Bodies is a major bottleneck. Contact me in private message if you know one with spare time!

Moreover, it is a very bad idea to CE mark a SaMD with the MDD. When the MDD expires in May 2020, the design of your device will be frozen. Something totally in contradiction with software lifecycle in general.

A new hope

Let me end, though, on a positive note.

Reading the bare-bones text of the MDR without the right interpretation can lead to exaggeration. In other words, the MDR (EU) 2017/745 lacks guidances.

Good news: a software classification guidance, written by the software WG of the CAMD Implementation Taskforce (see their roadmap), will be published by the end of 2019. We have the right to hope that they'll have a relaxed interpretation of rule 11 (no, non, nein, we didn't want to say that!).

Other good news: Notified Bodies are obtaining their MDR designation, beginning with BSI and TÜV SÜD. Others are in the pipeline but the risk of bottleneck is still present.

Anyway, if you can stay away from the MDR and its rule 11, then stay away.

Guidance from GMED Notified Body on significant changes in the framework of article 120 of MDR

The GMED notified body has published a guide on significant changes, named Guidance document for the interpretation of significant changes in the framework of article 120: “Transitional provisions” of Regulation (EU) 2017/745. This guide addresses changes according to article 120 of the MDR. It's a decision tree in the same vein as FDA guidances on deciding when to submit a new 510(k).

As a manufacturer, your mission, if you accept it, is to answer "No" for all your products CE marked with the MDD.

For hardware, be prepared to place your design in the freezer.

For software, be prepared to place your design in liquid nitrogen.

The guidance is on the page on white papers of GMED website.

Guideline on Cybersecurity from ANSM French Competent Authority

The ANSM French Competent Authority published in July 2019 a draft guideline on cybersecurity for medical devices. The European medical device sector should greatly applaud this initiative. This is the first and only guideline on cybersecurity with regard to the European medical device regulations.

This draft guideline, named ANSM’S guideline - Cybersecurity of medical devices integrating software during their life cycle is available on ANSM's website. The page is in French but an English version of the guideline can be downloaded through a link at the bottom of the page.

A guideline for the European Medical Device Regulation

The introductory text on the ANSM page tells us that:

This is the first time in Europe that recommendations in this area have been developed and the ANSM has shared its work with the European Commission so that the regulations evolve to integrate it.

The objective of this guideline is definitely to meet the General Requirements of the MDR.

But within the French context

The main drawback of this guideline is that it is French! Cybersecurity is still new in the world of medical devices, and most of the members of the committee who wrote this guideline are French experts in cybersecurity. Thus most of the references in the guideline come from existing guidances and methods published by French public organisations. This is not a blocking situation. The same happens with FDA guidances referencing only US documents.

If you are willing to read the ANSM guideline and if you've already read the FDA guidances on cybersecurity, just replace in your mind the French references by the US ones to get a better view of the French document:

- ANSSI by NIST,

- EBIOS by NIST Cybersecurity Framework,

- Other references like RGS or pdf files on ssi.gouv.fr website, by NIST SP-800 Special Publications found on NIST CSRC website.

Note: ANSSI and NIST are not the same. The equivalent of ANSSI is more the Cybersecurity and Infrastructure Security Agency (CISA) of the DHS. NIST missions are different, but it should work in our little exercise of correspondence.

Guideline recommendations

On the bright side, this guideline brings lots of advantages:

- It is very didactic, about half of the document is made of explanations to let people from the medical device sector understand what cybersecurity is, how it is different from safety risk management, and why a cybersecurity risk management process shall be established with regard to the MDR,

- It draws the link with MDR requirements, but also with GDPR requirements, like the requirement to erase data when decommissioning a device,

- It draws a list of recommendations on what can be done to prevent cybersecurity risks throughout the software lifecycle. This list can be seen as an equivalent of the annex C of ISO 14971:2007, giving hints on what the risk management team should think about.

These recommendations are presented in sections that reflect the software lifecycle:

- Design and development,

- Putting into service,

- Post-market surveillance,

- End of life.

Many manufacturers adopt a presentation of safety risks in their risk assessment report, by order of appearance in the life cycle of the device. The presentation in this guideline will thus ease the understanding and assessment of cyber risks.

Moreover, the recommendations found in the ANSM guideline complement those found in guidances from other jurisdictions. Most of the recommendations in the ANSM guideline aren't present or aren't explained the same way in the draft FDA guidance of October 2018 on Content of Premarket Submissions for Management of Cybersecurity in Medical Devices, or in the Canadian Draft Guidance Document on Pre‐market Requirements for Medical Device Cybersecurity.

Steps to make it an EU MDR guideline

A next step would be to promote this guideline at the European level. The French references would need to be replaced by European or international ones, namely:

- At first replacing EBIOS by something like ISO 27005,

- Other references by standards for security applied to health networks of the IEC 80001-1-x series,

- But also references to IEC 62304, IEC 82304 (maybe in the general provisions of software design activities), to enlarge the view to the interactions of the cyber security process with the software development process,

- And why not for medical device verification, references to ANSI UL 2900-x (or IEC equivalent, if SC62A in charge of medical device standards, and TC65 in charge of IEC 62443-x series agree...).

Conclusion

This guideline is worth reading!

Either you aren't familiar with cybersecurity, and the first half of the document will give you a good understanding of the situation. Or you are a bit familiar with cybersecurity, and the document will give you a good list of recommendations to apply throughout the device life cycle.

Cybersecurity in medical devices: a short review of UL 2900-1

We continue this series of articles on cybersecurity with a free and non-exhaustive review of UL 2900-1 standard.

What is UL 2900-1? This standard was published in 2017 by Underwriters Laboratories (UL). It contains technical requirements on cybersecurity for network connectable products. A collateral, UL 2900-2-1, focuses on connectable healthcare and wellness systems. UL 2900-1 and UL 2900-2-1 are FDA recognized standards and are thus applicable to medical devices.

Unlike other guides and standards, e.g. AAMI TIR 57 or ISO 27005 or FDA guidances, this standard doesn't contain requirements on a cybersecurity risk management process. It is more a set of requirements on cyber security measures to document, implement and verify.

However, section 12 of the standard requires establishing a security risk management process, with some specific requirements, like the use of classification schemes, e.g. CAPEC or CWRAF.

Thus, UL 2900-1 cannot be used alone, and you should rely on other standards or guidances to establish a security risk management process, before implementing UL 2900-1 requirements.

Comparison with 60601 standard?

Can we dare a comparison with 60601-1 standard?

IEC 60601-1 Ed3 contains a (huge) set of technical requirements, with a link to safety risk management process. UL 2900-1 contains a set of technical requirements with a link to security risk management process.

Like IEC 60601-1, evidence of compliance with UL 2900-1 requirements will be found in the device design and device verification plans and reports of the Design History File (FDA) or Technical File (CE mark) of the device.

Like IEC 60601-1, UL 2900-1 is made for certification of products by accredited laboratories, as of today by UL only.

Link with 62304 standard

IEC 62304 defines requirements on software lifecycle processes: development, maintenance, configuration management, and risk management.

UL 2900-1 defines requirements on the product. Thus, UL 2900-1 will affect your software development and maintenance plans, as well as the software requirement analysis, test plans and test reports required by IEC 62304.

In a few words: IEC 62304 is a process standard, UL 2900-1 is a product standard.

Remark: this is also true for IEC 82304-1

Product documentation

The standard begins with requirements on product documentation. Roughly half of the documentation items listed in the standard are already required by regulations or other standards, like IEC 62304.

The second half is specific to UL 2900-1, and is the output of the other requirements found in the standard.

This product documentation will be reviewed by UL for evaluation and certification, if you want to get a certificate.

Technical requirements

The rest of the standard is composed of a set of technical requirements grouped by different topics:

- Risk controls applicable to software design,

- Access controls, user authentication,

- Use of cryptographically secure mechanisms,

- Remote communication integrity and authenticity,

- Confidentiality of sensitive data,

- Product management in post-market: security updates, decommissioning,

- Validation of tools and processes from a security standpoint,

- Vulnerabilities, exploits and software weaknesses.

The standard ends with requirements on testing strategies, code reviews, static and dynamic analysis, addressing the above topics.

Some requirements are very specific, like:

- Minimum length of passwords shall be at least 6 characters,

- The number of test cases in malformed input testing shall be 1 million cases or 8 hours.
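Requirements this specific translate almost directly into verification code. Here is a minimal sketch, not taken from the standard: the parser under test (`parse_length_prefixed`) is hypothetical, and the sketch assumes that the fuzzing campaign stops at whichever budget (number of cases or elapsed time) is reached first.

```python
import random
import time

MIN_PASSWORD_LENGTH = 6        # value quoted above
MAX_FUZZ_CASES = 1_000_000     # value quoted above
MAX_FUZZ_SECONDS = 8 * 3600    # value quoted above


def password_length_ok(password: str) -> bool:
    """Check the minimum-length rule only, nothing else."""
    return len(password) >= MIN_PASSWORD_LENGTH


def fuzz(target, max_cases=MAX_FUZZ_CASES, max_seconds=MAX_FUZZ_SECONDS, seed=0):
    """Feed random byte strings to `target` until a budget is exhausted.

    Assumption: the campaign stops at whichever limit is reached first.
    Returns (number of cases run, list of inputs that crashed the target).
    """
    rng = random.Random(seed)
    deadline = time.monotonic() + max_seconds
    failures = []
    cases = 0
    while cases < max_cases and time.monotonic() < deadline:
        data = bytes(rng.getrandbits(8) for _ in range(rng.randint(0, 64)))
        try:
            target(data)
        except ValueError:
            pass  # a clean rejection of malformed input is the expected behaviour
        except Exception as exc:  # anything else is a finding to investigate
            failures.append((data, repr(exc)))
        cases += 1
    return cases, failures


def parse_length_prefixed(data: bytes) -> bytes:
    """Hypothetical parser under test: the first byte gives the payload length."""
    if not data:
        raise ValueError("empty message")
    payload = data[1:]
    if len(payload) != data[0]:
        raise ValueError("length mismatch")
    return payload
```

Calling `fuzz(parse_length_prefixed)` with the default budgets runs the full campaign; smaller budgets are handy for a quick smoke test in continuous integration.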

The testing strategies that have to be implemented cover all levels of software architecture: source code, unitary level, and GUI/architectural level.

It demonstrates that being compliant to UL 2900-1 has a strong impact both on design phases and design verification phases (If by any chance you had a doubt :-).

Conclusion

UL 2900-1 is a set of prescriptive requirements applicable to the design, design verification and post-market phases of a medical device. It requires the implementation of a security risk management process, to ensure the compliance to its requirements.

No security risk management process is documented in UL 2900-1. You have to go to AAMI TIR 57 and, more generally, to ISO 27005, to find state-of-the-art security risk management processes.

What happened to my Drug Prescription Assistance Software?

We know that Drug Prescription Assistance Software is software as a medical device, thanks to the European Court of Justice. But how do you CE mark that kind of software?

What is its regulatory class?

Thanks to the rogue, the villainous rule 11 of the 2017/745/EU MDR, we know that our software is in class IIa. At least.

Such software is used to detect contraindications, drug interactions and excessive doses (see the last paragraph at the bottom of the page through the link to the European Court of Justice above). Contraindications, drug interactions and excessive doses are hazardous situations for the patient. What if we have a software failure leading to a false negative on one of these hazardous situations?

The bets are open:

- In some cases, death of the patient, for instance if the medical personnel using the software doesn't detect the problem on a critical interaction, or

- More likely, severe consequences to the patient leading to additional medical intervention, or

- If we are lucky, non-severe consequences to the patient.

Thus, thanks to the rogue, the villainous rule 11, our software is, depending on the context:

- In class III, or

- In class IIb, or

- In class IIa.

So, if we don't restrict by the intended use the type of drugs managed by our software, or the personnel using it, our software is potentially in Class III. I repeat C.L.A.S.S T.H.R.E.E.
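The reasoning above boils down to "the worst credible harm drives the class". A minimal sketch of that logic follows; the `Harm` enum and the `mdr_class` function are illustrative names of mine, and the mapping only encodes the three cases discussed in this article, not the full wording of rule 11.

```python
from enum import Enum


class Harm(Enum):
    """Worst-case consequences discussed above, ordered by severity."""
    NON_SEVERE = 1
    SEVERE = 2   # severe consequences leading to additional medical intervention
    DEATH = 3


# Mapping taken from the reasoning in this article
CLASS_BY_HARM = {
    Harm.NON_SEVERE: "IIa",
    Harm.SEVERE: "IIb",
    Harm.DEATH: "III",
}


def mdr_class(possible_harms) -> str:
    """Return the class driven by the worst harm allowed by the intended use.

    With no harm listed, the result is class IIa, the minimum stated above.
    """
    worst = max(possible_harms, key=lambda harm: harm.value, default=Harm.NON_SEVERE)
    return CLASS_BY_HARM[worst]
```

With an unrestricted intended use, every harm is possible, so `mdr_class(list(Harm))` gives class III. Restricting the intended use is the only way to remove entries from that list.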

Lucky us, class I 93/42/EEC MDD -> class III 2017/745/EU MDR.

What is its IEC 62304 safety class?

Same player shoots again.

Our software can lead to, depending on the context:

- Death or permanent injury, or injury requiring medical intervention: In class C, or

- Non-serious injury: In class B,

- Forget class A, unless you're able to demonstrate that you have a mitigation action outside software (e.g. a mandatory clinical protocol) leading to an acceptable risk of false negative.
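The same decision can be written down as a small function. This is a sketch of the reasoning in this article, not the decision tree of the standard itself; the function name and the two input labels are assumptions made for the example.

```python
def software_safety_class(worst_injury: str, external_mitigation_ok: bool = False) -> str:
    """Return the IEC 62304 software safety class following the reasoning above.

    worst_injury: "serious" (death, permanent injury, or injury requiring
    medical intervention) or "non_serious".
    external_mitigation_ok: True only if a mitigation outside the software
    (e.g. a mandatory clinical protocol) is demonstrated to bring the risk
    of a false negative down to an acceptable level.
    """
    if worst_injury not in ("serious", "non_serious"):
        raise ValueError(f"unknown injury level: {worst_injury!r}")
    if external_mitigation_ok:
        return "A"
    return "C" if worst_injury == "serious" else "B"
```

The default value of `external_mitigation_ok` is deliberately `False`: class A has to be earned by a demonstration, it is never the starting point.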

Conclusion on classification

Once again, if we don't restrict the intended use, in other words, if we allow any kind of drug prescription in our software, we have the perfect combo:

2017/745/EU Class III and IEC 62304:2006+Amd1:2015 Class C.

CE mark

Preclinical data

Here, the path is well known, we have to apply the standards for software: IEC 82304-1, ISO 14971, IEC 62304 and IEC 62366-1. Plus standards on IFU, the future ISO 20417, and labelling ISO 15223-1.

Needless to say, we have to polish our design control process and the associated documents. Needless to say, it will cost an arm and a leg.

Clinical data

Ah ah, article 61 of the MDR, here we are!

What does it say for Class III devices? Article 61.4: a clinical investigation is mandatory.

I'm absolutely not a specialist of clinical investigations, but I'm sure we have a little problem. If my software isn't restricted by its intended use, my software addresses any patient with any pathology. Defining the cohort of patients in the clinical investigation protocol is going to be tricky!

But, thinking out loud, my software doesn't have a clinical performance. It only displays information on drugs. It's the drugs that carry the clinical performance.

Non-clinical validation?

My software doesn't have any clinical performance, but only a technical performance:

- Displaying data coming from a thesaurus on drugs, and/or

- Computing drug doses from weight or body mass index, namely a rule of three, and/or

- Displaying some alerts based on thresholds found in the same thesaurus.

So I don't need a clinical investigation. Performance bench testing should be enough; that's what article 61.10 allows. But this article 61.10 begins with the legal jargon: without prejudice to 61.4.
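To make the idea of bench testing a purely technical performance concrete, here is a minimal sketch. Everything in it is hypothetical: the drug name, the dose per kilogram and the threshold are made-up values for illustration, not clinical data, and the function names are mine.

```python
# Fictitious thesaurus entry, for illustration only (NOT clinical data)
THESAURUS = {
    "drug_x": {"mg_per_kg": 10.0, "max_dose_mg": 500.0},
}


def compute_dose_mg(drug: str, weight_kg: float) -> float:
    """Rule of three: dose proportional to the patient's weight."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    return THESAURUS[drug]["mg_per_kg"] * weight_kg


def overdose_alert(drug: str, dose_mg: float) -> bool:
    """Alert when the dose exceeds the threshold found in the thesaurus."""
    return dose_mg > THESAURUS[drug]["max_dose_mg"]


def run_bench():
    """Bench test: compare computed values with expected values, case by case."""
    cases = [
        # (drug, weight in kg, expected dose in mg, expected alert)
        ("drug_x", 40.0, 400.0, False),
        ("drug_x", 50.0, 500.0, False),  # exactly at the threshold: no alert
        ("drug_x", 60.0, 600.0, True),
    ]
    results = []
    for drug, weight, expected_dose, expected_alert in cases:
        dose = compute_dose_mg(drug, weight)
        alert = overdose_alert(drug, dose)
        results.append(dose == expected_dose and alert == expected_alert)
    return results
```

A false negative, the hazardous situation discussed earlier, would show up here as a case where `expected_alert` is `True` and the software returns `False`.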

Clinical investigation or not clinical investigation, that is the question.

Pliz, European Commission, we need a guidance, a common specification, whatever.

Conclusion

If you have a Drug Prescription Assistance Software to CE mark, craft very carefully the intended use. Or the probability of regulatory failure will be set to 1.

User stories or software requirements?

I frequently have the following discussion with software development teams: can user stories be taken as software requirements? The answer is yes or no. All cases can be found in nature!

When user-stories are not software requirements

This is the first choice. User stories are not software requirements. What are they, then?

Obscure answer for software development engineers: they can be seen as micro change requests!

Non-obscure answer: they can be seen as scraps of requirements, which will be used to instantiate new requirements and/or to update existing requirements.

Example:

- REQ1: The software shall display the images in true colors or inverted colors.

- REQ2: The control panel shall contain a checkbox to activate the inverted colors mode.

Here comes a new user story:

- As a user, I want to display the images in grey scale or in black and white.

We have to update our existing requirements to:

- REQ1: The software shall display the images in true colors, inverted colors, grey scale or black and white,

- REQ2: The control panel shall contain a combo box to choose the display modes found in REQ1.
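The "micro change request" idea can be sketched as a requirement that keeps track of the user stories that changed it. The `Requirement` class and its `revise` method are illustrative names, not a feature of any particular requirements management tool.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    req_id: str
    text: str
    # Each entry: (previous text, user story that triggered the change)
    history: list = field(default_factory=list)

    def revise(self, new_text: str, user_story: str) -> None:
        """Update the requirement and keep a trace of why it changed."""
        self.history.append((self.text, user_story))
        self.text = new_text


req1 = Requirement(
    "REQ1",
    "The software shall display the images in true colors or inverted colors.",
)
req2 = Requirement(
    "REQ2",
    "The control panel shall contain a checkbox to activate the inverted colors mode.",
)

story = "As a user, I want to display the images in grey scale or in black and white."

# The user story acts as a micro change request on both requirements
req1.revise(
    "The software shall display the images in true colors, inverted colors, "
    "grey scale or black and white.",
    story,
)
req2.revise(
    "The control panel shall contain a combo box to choose the display modes "
    "found in REQ1.",
    story,
)
```

The specification stays a classic list of requirements, and the history gives the traceability from each change back to the user story that caused it.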

The advantage of this method is to remain on the beaten track of software requirement specifications, as they're expected by regulatory reviewers.

The drawback is, well, to be less agile than managing user stories.

When user-stories are software requirements

This is the second choice. User stories are software requirements.

The formalism of user-stories matches well with the expectations on software requirements: As <a persona>, I want to <do something> so that I can <get a benefit>.

This formalism allows requirements to be expressed in terms that avoid ambiguity, and acceptance test criteria to be established.
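As an illustration, the formalism maps naturally onto a small data structure in which the acceptance criteria live next to the story. The class name, field names and example content below are assumptions made for the sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserStory:
    story_id: str
    persona: str
    action: str
    benefit: str
    acceptance_criteria: tuple = ()

    def render(self) -> str:
        """Word the story with the formalism quoted above."""
        return f"As {self.persona}, I want to {self.action} so that I can {self.benefit}."


# Hypothetical example, reusing the image display theme of this article
us1 = UserStory(
    story_id="US1",
    persona="a user",
    action="display the images in grey scale",
    benefit="see low-contrast details",
    acceptance_criteria=(
        "A grey scale mode can be selected in the control panel.",
        "The displayed image contains only grey levels when the mode is active.",
    ),
)
```

Here `us1.render()` returns "As a user, I want to display the images in grey scale so that I can see low-contrast details.", and each acceptance criterion can be traced to a test case, as would be done for a classic software requirement.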

Non-functional requirements

At first sight, some categories of software requirements, the non-functional requirements (NFR), cannot be easily worded as user-stories (US). Fortunately, there's a solution: using personas who get a benefit from these NFRs.

Example 1:

- NFR: The system shall be able to export data in HL7 format,